TiDB (NewSQL) Tutorial


TiDB is a distributed SQL database inspired by the design of Google F1.

TiDB aims to combine the strengths of traditional RDBMS (SQL, ACID transactions) and NoSQL (horizontal scalability).

TiKV is a distributed key-value database written in Rust that uses the Raft consensus algorithm.

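Note:

TiDB is a distributed SQL database developed by PingCAP. It has many strong features and is fully open source.

It is not production-ready yet, but a GA release should come soon. This post records our first installation and deployment of TiDB and a quick test of its features. We hope it helps anyone who wants to try TiDB early and avoid some pitfalls.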

Keywords

- The Raft Consensus Algorithm

- Distributed transactions (two-phase commit protocol)

- RocksDB

Latest documentation

https://download.pingcap.org/tidb-ansible-doc-cn-1.0-dev.pdf

Features:

+ Horizontal scalability

+ Asynchronous schema changes

+ Consistent distributed transactions

+ Compatible with MySQL protocol

+ Multiple storage engine support

+ NewSQL over TiKV

+ Written in Go

Architecture

- Cluster level:

  - A TiDB cluster consists of TiDB servers, TiKV storage nodes and a PD cluster.

  - TiDB is the SQL layer and is compatible with the MySQL protocol.

  - TiKV is the storage layer, where the data actually lives.

  - PD (Placement Driver) manages and schedules the TiKV cluster and stores the cluster metadata.

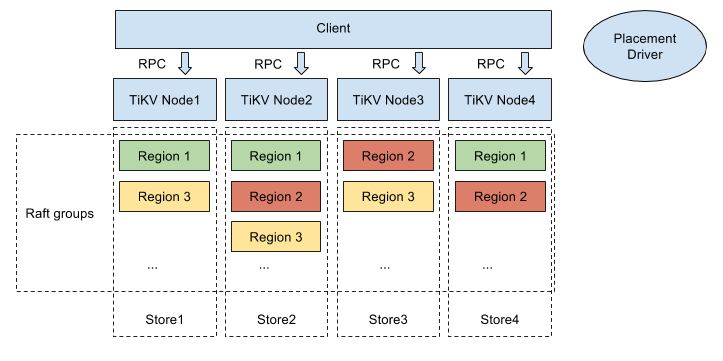

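- TiKV:

  - A TiKV cluster consists of several nodes.

  - Each node runs one RocksDB instance.

  - Data is stored in regions; the region size is 64 MB by default (configurable).

  - Data consistency is guaranteed by the Raft consensus algorithm: each region is replicated (3 replicas by default, configurable), and the replicas of one region form a Raft group.

  - TiKV uses Multi-Raft: every region runs its own Raft group, so one cluster hosts many Raft groups.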
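The following back-of-the-envelope sketch makes the region and replica numbers above concrete using shell arithmetic. It makes several assumptions:

- The region size is 64 MB and there are 3 replicas, as described above.
- Every region is completely full. Real regions split on size thresholds and vary in size.
- Raft follows the standard majority rule. A group of n replicas needs floor(n/2)+1 votes to commit and tolerates floor((n-1)/2) failures.

The function names are hypothetical helpers, not TiKV tools.

```bash
#!/usr/bin/env bash
# Back-of-the-envelope TiKV sizing. Hypothetical helpers, not part of TiKV.

# region_count DATA_MB [REGION_MB]: regions needed, assuming every region is full
# (ceiling division).
region_count() {
  local data_mb=$1 region_mb=${2:-64}
  echo $(( (data_mb + region_mb - 1) / region_mb ))
}

# raft_quorum N: votes a Raft group of N replicas needs to commit (strict majority).
raft_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# raft_max_failures N: replicas that may fail while a majority survives.
raft_max_failures() {
  echo $(( ($1 - 1) / 2 ))
}

data_mb=10240   # example input: 10 GB of data
replicas=3      # default replica count described above
regions=$(region_count "$data_mb" 64)
echo "regions / raft groups: $regions"
echo "region replicas:       $(( regions * replicas ))"
echo "quorum per group:      $(raft_quorum "$replicas")"
echo "tolerated failures:    $(raft_max_failures "$replicas")"
```

With 3 replicas, every region keeps accepting writes as long as 2 of its 3 replicas are reachable. Going to 5 replicas raises the tolerated failures to 2, at the cost of more storage and more votes per write.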

Software Installation

TiDB and PD are written in Go.

    ## Install Go 1.7.x

    ## TiDB
    git clone https://github.com/pingcap/tidb.git $GOPATH/src/github.com/pingcap/tidb
    cd $GOPATH/src/github.com/pingcap/tidb
    make

    ## PD
    git clone https://github.com/pingcap/pd.git $GOPATH/src/github.com/pingcap/pd
    cd $GOPATH/src/github.com/pingcap/pd
    make build

RocksDB

RocksDB must be installed before TiKV; otherwise the TiKV build fails.

Install it by following the RocksDB installation documentation:

    wget source_url
    # install gflags & snappy & lz4
    yum install -y snappy-devel zlib-devel bzip2-devel lz4-devel
    make shared_lib
    make install-shared
    # refresh the linker cache so TiKV can find RocksDB
    ldconfig

Careful: RocksDB's version must be >= 4.12; the master branch is OK.
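After `make install-shared` and `ldconfig`, it can be worth confirming that the dynamic linker actually sees RocksDB before you start the long TiKV build. The minimal sketch below makes three assumptions:

- `ldconfig -p` is available. It is on CentOS 7, as `/sbin/ldconfig`.
- The library file name contains `librocksdb.so`.
- The helper name `lib_visible` is hypothetical.

```bash
#!/usr/bin/env bash
# lib_visible NAME: succeed if NAME appears in the dynamic linker cache.
# Hypothetical helper, not a RocksDB or TiKV tool.
lib_visible() {
  # ldconfig -p prints the cache, one library per line;
  # -F matches NAME literally, -q only sets the exit status.
  ldconfig -p 2>/dev/null | grep -qF "$1"
}

if lib_visible librocksdb.so; then
  echo "librocksdb is visible to the dynamic linker"
else
  echo "librocksdb not found: check the install prefix and rerun ldconfig" >&2
fi
```

If the check fails even though `make install-shared` succeeded, the install directory (RocksDB's Makefile defaults to the `/usr/local` prefix) may not be on the linker's search path. You can add it under `/etc/ld.so.conf.d/` and rerun `ldconfig`.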

GCC 4.8 works well; with GCC 4.7 you may run into C++11 compile errors such as "'yield' is not a member of 'std::this_thread'".
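This post pins several minimum versions (Go 1.7.x, RocksDB >= 4.12, GCC 4.8), so a quick check before a long build can save time. The sketch below assumes purely numeric, dot-separated versions and does not handle suffixes like `-rc1`. The helper `version_ge` is hypothetical.

```bash
#!/usr/bin/env bash
# version_ge A B: succeed (exit 0) if version A >= version B.
# Compares dot-separated numeric components left to right; missing ones count as 0.
version_ge() {
  local a=$1 b=$2 x y
  while [ -n "$a$b" ]; do
    x=${a%%.*}; y=${b%%.*}   # leading component of each version
    x=${x:-0};  y=${y:-0}
    [ "$x" -gt "$y" ] && return 0
    [ "$x" -lt "$y" ] && return 1
    # drop the component just compared
    case $a in *.*) a=${a#*.} ;; *) a= ;; esac
    case $b in *.*) b=${b#*.} ;; *) b= ;; esac
  done
  return 0   # all components equal
}

gcc_version=$(gcc -dumpversion 2>/dev/null)
if [ -n "$gcc_version" ] && version_ge "$gcc_version" 4.8; then
  echo "gcc $gcc_version is new enough"
else
  echo "gcc ${gcc_version:-not found}: 4.8 or newer is recommended" >&2
fi
```

The same helper can check other prerequisites:

- **Go:** parse the version from the output of `go version`.
- **RocksDB:** read the `ROCKSDB_MAJOR` and `ROCKSDB_MINOR` macros in `include/rocksdb/version.h`, then compare against 4.12.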

Install GCC 4.8 on CentOS 7.x


TiKV is written in Rust.

Install Rust (nightly):

    curl -sSf https://static.rust-lang.org/rustup.sh | sh -s -- --channel=nightly
    ## or download the tarball

    ## download the source code
    git clone https://github.com/pingcap/tikv.git /root/tikv
    cd /root/tikv

    ## build
    make && make install

Careful: if the build fails the first time, run `cargo clean` before building again.

Set Up a TiDB Cluster

With the software installed, we now build a three-node cluster.

According to the cluster deployment documentation, the start-up order is PD -> TiKV -> TiDB.
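Each component needs the previous one to be running. A start-up script can therefore wait for a TCP port to accept connections before moving on to the next step. The minimal sketch below relies on bash's `/dev/tcp` redirection, so it will not work in a plain POSIX `sh`. `wait_for_port` is a hypothetical helper. An open port only means the process is listening, not that it has finished initializing.

```bash
#!/usr/bin/env bash
# wait_for_port HOST PORT [TRIES]: poll once per second until HOST:PORT
# accepts a TCP connection; fail after TRIES attempts (default 30).
wait_for_port() {
  local host=$1 port=$2 tries=${3:-30} i=0
  while [ "$i" -lt "$tries" ]; do
    # Opening /dev/tcp/HOST/PORT makes bash attempt a TCP connect;
    # the subshell closes the descriptor again when it exits.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    [ "$i" -lt "$tries" ] && sleep 1
  done
  echo "timed out waiting for $host:$port" >&2
  return 1
}
```

In the PD -> TiKV -> TiDB order, you would use it like this:

1. Start `pd-server`, then run `wait_for_port xx0.x2x.8x.64 2379` before starting `tikv-server`.
2. Run `wait_for_port xx0.x2x.8x.64 20160` before starting TiDB.

These ports are the defaults we assume: 2379 for PD clients, 20160 for TiKV, and 4000 for TiDB. Adjust them if your flags or `tikv.toml` differ. Connecting to a host whose firewall silently drops packets can block for the kernel's TCP timeout; in that case you could wrap the call in `timeout`.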

Host list:

xx0.x2x.8x.64

xx0.x2x.8x.65

xx0.x2x.8x.66

Start the PD Cluster

    ./pd-server --cluster-id=1 \

Start the TiKV Cluster

    ## run the following command on every node
    ./tikv-server --config /root/tidb/tikv/etc/tikv.toml --log-file=/tmp/tikv.log &

Start the TiDB Server

Use the following command to start one TiDB server:

    ## execute

You can start one TiDB server on every node.

Now you can use TiDB just like MySQL:

    mysql -h xx0.x2x.8x.64 -P 4000 -u root -D test

Summary

We ran into some problems and bugs along the way and filed issues for them. We will write about the rest after we finish our testing and evaluation.